Using Web Queries and a Loop to Download 4000 Database Entries from 4000 Web Pages

September 26, 2004 - by Bill Jelen

One day, I received a broadcast e-mail from Jan at the PMA. She was passing along a great idea from Gary Gagliardi of Clearbridge Publishing. Gary mentioned that some search engines assign a page rank to a page based on how many other sites link to the page. He was suggesting that if all 4000 members of the PMA would link to all 4000 other members of the PMA, it would boost all of our rankings. Jan thought this was a great idea and said that all PMA member web addresses are listed on the current PMA website in the members area.

Personally, I think the "number of links" theory is a bit of a myth, but I was willing to give it a try in order to help out.

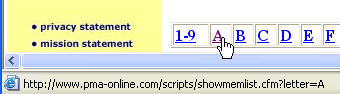

So, I visited the PMA Members area, where I quickly learned that there wasn't a single list of members, but in fact 27 lists of members.

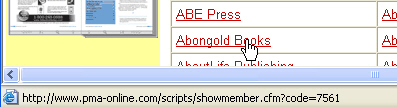

As I clicked through to the "A" page, I saw that it was even worse. Each link on this page did not lead to the member's website. Each link here lead to an individual page at PMA-online with the member's website.

This would mean that I would have to visit thousands of web pages in order to compile the list of members. This clearly would be an insane proposition.

Luckily, I am the co-author of VBA & Macros for Microsoft Excel. I wondered if I could customize the code from the book to solve the problem of extracting member URL's from thousands of linked pages.

Chapter 14 of the book is about using Excel for reading from and writing to the web. On page 335, I found code that could create a web query on the fly.

The first step was to see if I could customize the code in the book to be able to produce 27 web queries - one for each of the letters of the alphabet and the number 1. This would give me several lists of all the links on the 26 alphabetical page listings.

Each page has a URL similar to http://www.pma-online.org/scripts/showmemlist.cfm?letter=A. I took code from page 335 and customized it a bit to do 27 web queries.

Sub CreateNewQuery()

' Page 335

Dim WSD As Worksheet

Dim WSW As Worksheet

Dim QT As QueryTable

For m = 1 To 27

Select Case m

Case 27

MyStr = "1"

Case Else

MyStr = Chr(64 + m)

End Select

MyName = "Query" & m

ConnectString = "URL;http://www.pma-online.org/scripts/showmemlist.cfm?letter=" & MyStr

ThisWorkbook.Worksheets.Add

ActiveSheet.Name = m

' On the Workspace worksheet, clear all existing query tables

For Each QT In ActiveSheet.QueryTables

QT.Delete

Next QT

' Define a new Web Query

Set QT = ActiveSheet.QueryTables.Add(Connection:=ConnectString, Destination:=Range("A1"))

With QT

.Name = MyName

.FieldNames = True

.RowNumbers = False

.FillAdjacentFormulas = False

.PreserveFormatting = True

.RefreshOnFileOpen = False

.BackgroundQuery = False

.RefreshStyle = xlInsertDeleteCells

.SavePassword = False

.SaveData = True

.AdjustColumnWidth = True

.RefreshPeriod = 0

.WebSelectionType = xlSpecifiedTables

.WebFormatting = xlWebFormattingAll

.WebTables = "7"

.WebPreFormattedTextToColumns = True

.WebConsecutiveDelimitersAsOne = True

.WebSingleBlockTextImport = False

.WebDisableDateRecognition = False

.WebDisableRedirections = False

End With

' Refresh the Query

QT.Refresh BackgroundQuery:=True

Next m

End SubThere were four items that were customized in the above code.

- First, I had to build the correct URL. This was achieved by appending the proper letter to the end of the URL string.

- Second, I modified the code to run each query on a new worksheet in the workbook.

- Third, the code in the book was grabbing the 20th table from the web page. By recording a macro pulling in the table from PMA, I learned that I needed the 7th table on the web page.

- Fourth, after running the macro, I was disappointed to see that I was getting the names of the publishers, but not the hyperlinks. The code in the book specified .WebFormatting:=xlFormattingNone. Using VBA help, I figured that if I changed to .WebFormatting:=xlFormattingAll, I would get the actual hyperlinks.

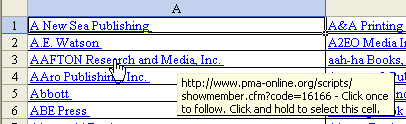

After running this first macro, I had 27 worksheets, each with a series of hyperlinks that looked like this:

The next step was to extract the hyperlinked address from every hyperlink on the 27 worksheets. It is not in the book, but there is a hyperlink object in Excel. The object has an .Address property that would return the webpage within PMA-Online with the URL for that publisher.

Sub GetEmAll()

NextRow = 1

Dim WSD As Worksheet

Dim WS As Worksheet

Set WSD = Worksheets("Sheet1")

For Each WS In ActiveWorkbook.Worksheets

If Not WS.Name = "Sheet1" Then

For Each cll In WS.UsedRange.Cells

For Each hl In cll.Hyperlinks

WSD.Cells(NextRow, 1).Value = hl.Address

NextRow = NextRow + 1

Next hl

Next cll

End If

Next WS

End SubAfter running this macro, I finally learned that there were 4119 individual webpages at the PMA site. I am glad that I did not try to visit each individual site one at a time!

My next goal was to have a webquery built to visit each of the 4119 individual web pages. I recorded a macro returning one of the individual publisher pages to learn that I wanted table # 5 from each page. I could see that the publisher name was returned as the fifth row of the table. In most cases, the website was returned as the 13th row. However, I learned that in some cases, if the street address was 3 lines instead of 2, the website URL was actually on row 14. If they had 3 telephones instead of 2, the website was pushed down another row. The macro would have to be flexible enough to search from perhaps row 13 to 18 in order to find the cell that started WWW:.

There was another dilemma. The code in the book allows the webquery to refresh in the background. In most cases, I would actually watch the query finish after the macro finished. My initial thought was to allow 40 rows for each publisher, and to build all 4100 queries on each page. This would have required 80,000 rows of spreadsheet and a lot of memory. In Excel 2002, I experimented with changing the BackgroundRefresh to False. VBA did a good job of pulling the information into the worksheet before the macro would go on. This allowed be to build the query, refresh the query, save the values to a database, then delete the query. Using this method, there was never more than one query at a time on the worksheet.

Sub AllQuery()

Dim WS As Worksheet

Dim WD As Worksheet

Set WD = Worksheets("database")

Set WS = Worksheets("Sheet1")

Dim QT As QueryTable

WS.Activate

OutCol = 8

OutRow = 1

FinalRow = WS.Cells(65536, 1).End(xlUp).Row

For i = 2 To FinalRow

ConnectString = "URL;" & WD.Cells(i, 12).Value

Application.StatusBar = i

' Save after every 500 queries

If i Mod 500 = 0 Then

ThisWorkbook.Save

End If

MyName = "Query" & i

' Define a new Web Query

Set QT = ActiveSheet.QueryTables.Add(Connection:=ConnectString, Destination:=WS.Cells(OutRow, OutCol))

With QT

.Name = MyName

.FieldNames = True

.RowNumbers = False

.FillAdjacentFormulas = False

.PreserveFormatting = True

.RefreshOnFileOpen = False

.BackgroundQuery = False

.RefreshStyle = xlOverwriteCells

.SavePassword = False

.SaveData = True

.AdjustColumnWidth = True

.RefreshPeriod = 0

.WebSelectionType = xlSpecifiedTables

.WebFormatting = xlWebFormattingNone

.WebTables = "5"

.WebPreFormattedTextToColumns = True

.WebConsecutiveDelimitersAsOne = True

.WebSingleBlockTextImport = False

.WebDisableDateRecognition = False

.WebDisableRedirections = False

End With

' Refresh the Query

QT.Refresh BackgroundQuery:=False

' Change from a live query to values

WS.Cells(OutRow, OutCol).Resize(40, 2).Value = WS.Cells(OutRow, OutCol).Resize(40, 2).Value

For Each QT In WS.QueryTables

QT.Delete

Next QT

' Copy to Database

WD.Cells(i, 1).Value = WS.Cells(5, 8).Value

For j = 13 To 20

CheckIt = WS.Cells(j, 8).Value

If Left(CheckIt, 3) = "WWW" Then

WD.Cells(i, 8).Value = CheckIt

End If

Next j

Next i

End SubThis query took more than an hour to run. After all, it was doing the work of visiting over 4000 web pages. It did run without a hitch and did not crash the computer or Excel.

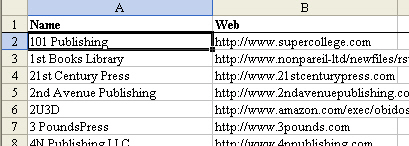

I then had a nice database in Excel with Publisher name in column A and the website in column B. After sorting by website in Column B, I found that over 1000 publishers did not list a web site. Their entry in column B was a blank URL. I sorted and deleted these rows.

Also, the websites listed in column B had "WWW: " before each URL. I used a Edit > Replace to change each occurence of WWW: (with a space after it) to nothing. I had a nice list of 2339 publishers on a spreadsheet.

The last step was to write out a text file that could be copied and pasted into any members' website. The following macro (adapted from the code on page 345) handled this task nicely.

Sub WriteHTML()

On Error Resume Next

Kill "C:\PMALinks.txt"

On Error GoTo 0

Open "C:\PMALinks.txt" For Output As #1

Print #1, "Visit the websites of our fellow PMA members:<br><UL>"

For i = 2 To 2340

MyStr = "<LI><a href=""" & Cells(i, 2).Value & """>" & Cells(i, 1).Value & "</a>"

Print #1, MyStr

Next i

Print #1, "</UL>"

Close #1

End SubThe result was a text file with the name and URL of 2000+ publishers.

All of the above code was adapted from the book. When I started, I was sort of just doing a one-off program that I didn't envision running regularly. However, I can now imaging going back to the PMA website every month or so to get the updated lists of URL's.

It would be possible to put all of the above steps into a single macro.

Sub DoEverything()

Dim WSW As Worksheet

Dim WST As Worksheet

Set WSW = Worksheets("Workspace")

Set WST = Worksheets("Template")

On Error Resume Next

Kill "C:\AutoLinks.txt"

On Error GoTo 0

Open "C:\PMALinks.txt" For Output As #1

Print #1, "Visit the websites of our fellow PMA members:<br><UL>"

For m = 1 To 27

Select Case m

Case 27

MyStr = "1"

Case Else

MyStr = Chr(64 + m)

End Select

MyName = "Query" & m

ConnectString = "URL;http://www.pma-online.org/scripts/showmemlist.cfm?letter=" & MyStr

' On the Workspace worksheet, clear all existing query tables

For Each QT In WSW.QueryTables

QT.Delete

Next QT

' Define a new Web Query

Set QT = WSW.QueryTables.Add(Connection:=ConnectString, Destination:=WSW.Range("A1"))

With QT

.Name = MyName

.FieldNames = True

.RowNumbers = False

.FillAdjacentFormulas = False

.PreserveFormatting = True

.RefreshOnFileOpen = False

.BackgroundQuery = False

.RefreshStyle = xlInsertDeleteCells

.SavePassword = False

.SaveData = True

.AdjustColumnWidth = True

.RefreshPeriod = 0

.WebSelectionType = xlSpecifiedTables

.WebFormatting = xlWebFormattingAll

.WebTables = "7"

.WebPreFormattedTextToColumns = True

.WebConsecutiveDelimitersAsOne = True

.WebSingleBlockTextImport = False

.WebDisableDateRecognition = False

.WebDisableRedirections = False

End With

' Refresh the Query

QT.Refresh BackgroundQuery:=False

' Next, loop through all of the hyperlinks in the resulting page

For Each cll In WSW.UsedRange.Cells

For Each hl In cll.Hyperlinks

MyURL = hl.Address

' Build a web query on WST

ConnectString = "URL;" & MyURL

MyName = "Query" & NextRow

' Define a new Web Query

Set QT = WST.QueryTables.Add(Connection:=ConnectString, Destination:=WST.Cells(1, 1))

With QT

.Name = MyName

.FieldNames = True

.RowNumbers = False

.FillAdjacentFormulas = False

.PreserveFormatting = True

.RefreshOnFileOpen = False

.BackgroundQuery = False

.RefreshStyle = xlOverwriteCells

.SavePassword = False

.SaveData = True

.AdjustColumnWidth = True

.RefreshPeriod = 0

.WebSelectionType = xlSpecifiedTables

.WebFormatting = xlWebFormattingNone

.WebTables = "5"

.WebPreFormattedTextToColumns = True

.WebConsecutiveDelimitersAsOne = True

.WebSingleBlockTextImport = False

.WebDisableDateRecognition = False

.WebDisableRedirections = False

End With

' Refresh the Query

QT.Refresh BackgroundQuery:=False

' Change from a live query to values

WST.Cells(1, 1).Resize(40, 2).Value = WST.Cells(1, 1).Resize(40, 2).Value

For Each QT In WS.QueryTables

QT.Delete

Next QT

' Find URL

ThisPub = WS.Cells(5, 8).Value

ThisURL = "WWW: http://"

For j = 13 To 20

CheckIt = WS.Cells(j, 8).Value

If Left(CheckIt, 3) = "WWW" Then

ThisURL = CheckIt

End If

Next j

If Not ThisURL = "WWW: http://" Then

' write a record to the .txt file

MyStr = "<LI><a href=""" & ThisURL & """>" & ThisPub & "</a>"

Print #1, MyStr

End If

Next hl

Next cll

Next m

Print #1, "</UL>"

Close #1

End SubExcel and VBA provided a quick alternative to individually visiting thousands of web pages. In theory, the PMA should have been able to query their database and provide this information far more quickly than using this method. However, sometimes you are dealing with someone who is uncooperative or possibly doesn't know how to get data out of a database that someone else wrote for them. In this case, a bit of VBA macro code solved our problem.